Define a Build Job

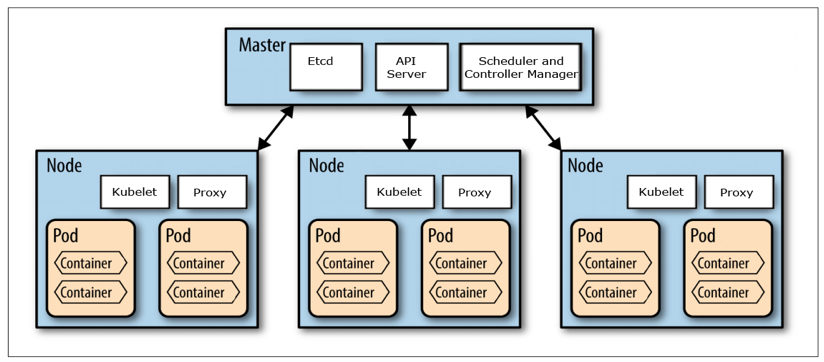

Build jobs are the way you define automated tasks that your CI/CD platform executes. For this Refcard, we’ll use Oracle Developer Cloud Service as an example; it provides a CI/CD engine that orchestrates and executes these build jobs. Developer Cloud Service can automate CI/CD for various types of software deliverables, but in this section, we’ll focus on automating the cycle for Docker containers.

A build job for Docker containers can include several steps – all of them leverage the Docker command line to execute activities on your definition files and the images generated from them.

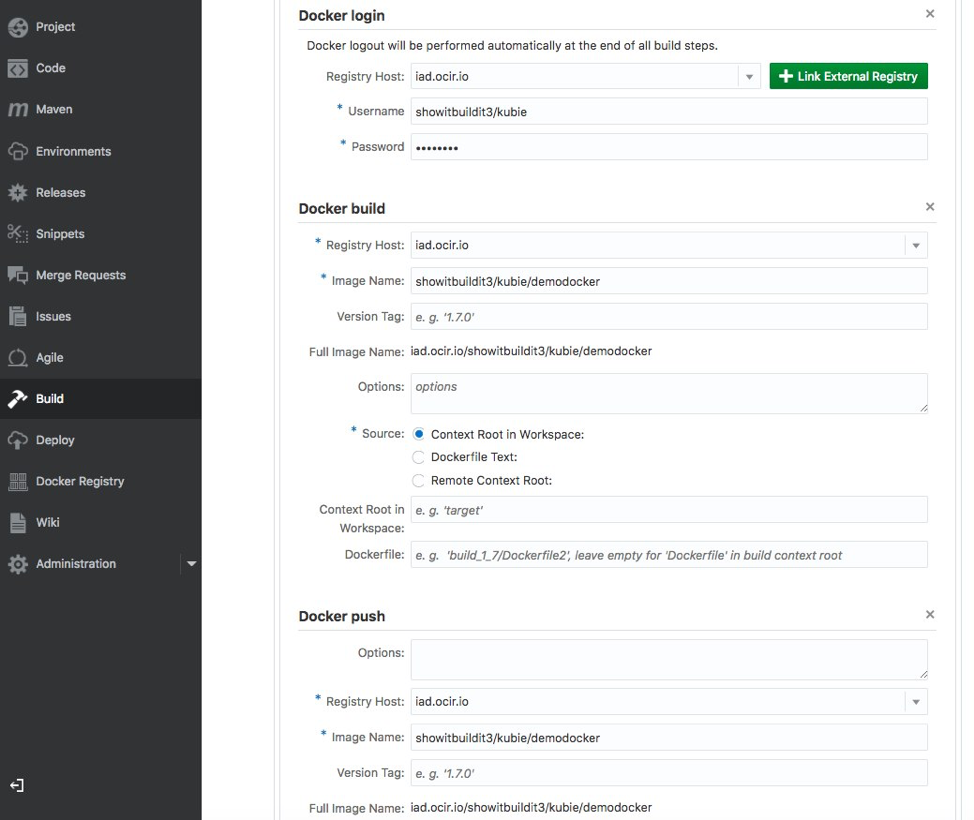

First, you’ll want to build the Docker image to verify that it is configured properly. In the build step, you configure where the definition files are located (for example, in your Git repository’s root directory). You might want to add a specific tag to your image so you can easily manage multiple versions. This can be done either as part of the build or in an independent build step.

Next, you might want to publish the built Docker image to an image repository, which might mean that you’ll first need to log in to that repository. There are public repositories, such as Docker Hub, as well as private repositories, such as the one provided by the Oracle Cloud Infrastructure Registry.

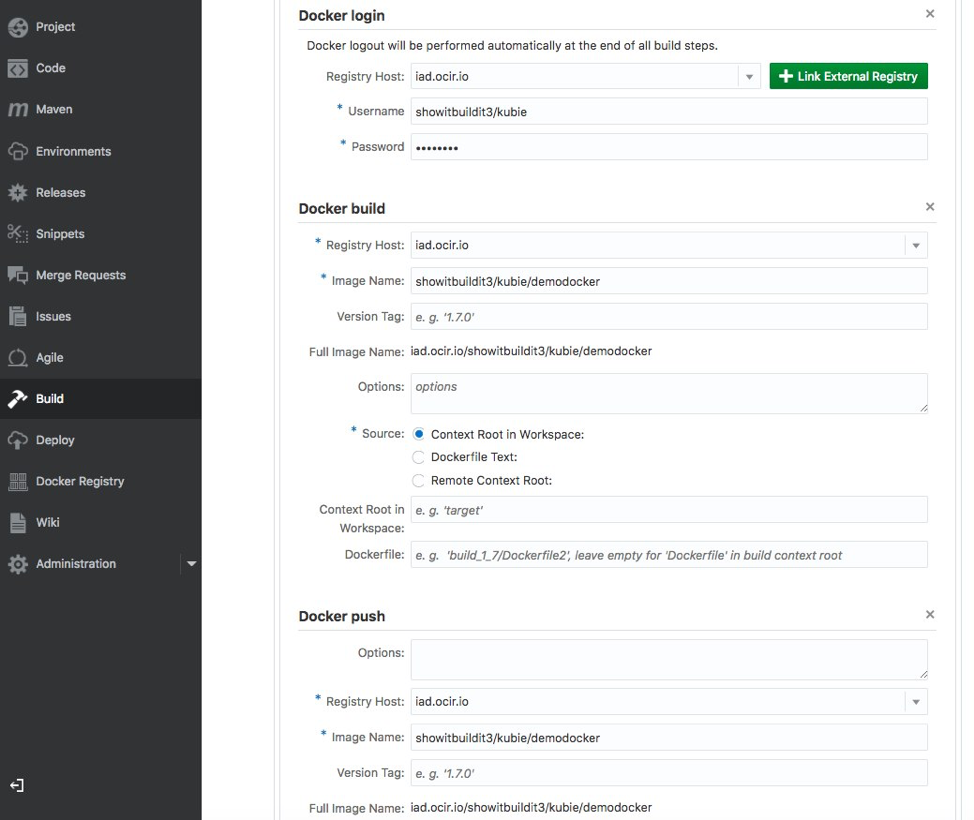

In Developer Cloud Service, you can sequence all of these steps in a single build job or break them into separate jobs. Developer Cloud Service also supports a variety of other Docker commands, and all of them can be defined in a declarative way, reducing the chance of errors in your build scripts.

A simple set of steps defined in a declarative way in Developer Cloud Service to publish a Docker image to Oracle’s Docker Registry

Another option is to just write a shell script with the Docker commands manually and execute it in your CI/CD flow.
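
As a rough illustration of the shell-script route, the sketch below chains the build, tag, login, and push steps described earlier. The registry host, image name, tag, and the `REGISTRY_USER` and `REGISTRY_PASSWORD` variables are hypothetical placeholders rather than values taken from Developer Cloud Service. By default the script only prints the commands; setting `DRY_RUN=0` would execute them.

```shell
#!/bin/sh
# Sketch: build, tag, and publish a Docker image from a CI step.
# Registry, image, tag, and credential names are hypothetical placeholders.
set -eu

REGISTRY="${REGISTRY:-registry.example.com}"
IMAGE="${IMAGE:-myteam/nodejs-micro}"
TAG="${TAG:-1.0.0}"
REGISTRY_USER="${REGISTRY_USER:-ci-user}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

# Print a command, and run it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "$DRY_RUN" = "0" ]; then "$@"; fi
}

publish_image() {
  ref="$REGISTRY/$IMAGE:$TAG"
  # Build from the Dockerfile in the current directory and tag the result.
  run docker build -t "$IMAGE:$TAG" .
  # Add a registry-qualified name so the image can be pushed.
  run docker tag "$IMAGE:$TAG" "$ref"
  # Log in non-interactively; the password is read from stdin, not argv.
  echo "+ docker login -u $REGISTRY_USER --password-stdin $REGISTRY"
  if [ "$DRY_RUN" = "0" ]; then
    printf '%s' "${REGISTRY_PASSWORD:?set REGISTRY_PASSWORD}" |
      docker login -u "$REGISTRY_USER" --password-stdin "$REGISTRY"
  fi
  run docker push "$ref"
}

publish_image
```

Keeping the dry-run default makes it easy to review the exact commands in the build log before letting the job touch a real registry.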

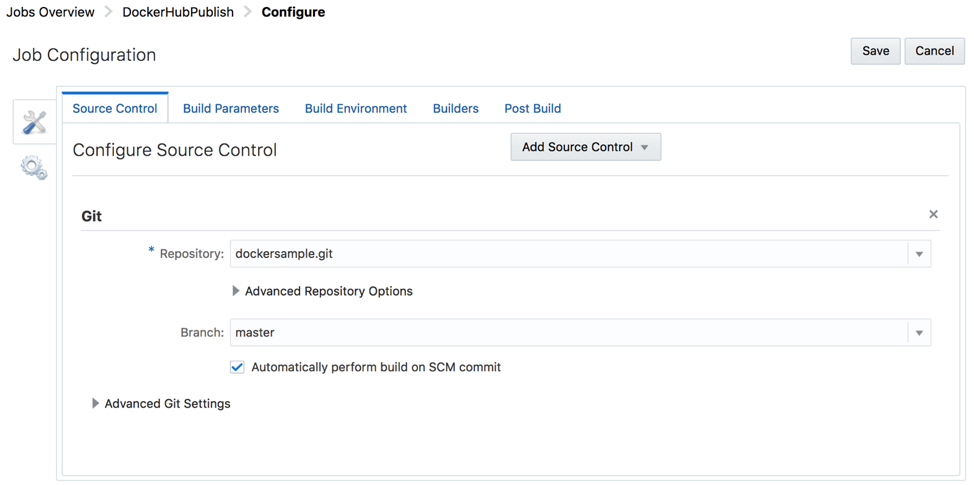

Tie the Build Job to Your Git Repository

Adopting the “Infrastructure as Code” approach means that your Docker container definition files should reside in a version management repository, like any other piece of code your team produces.

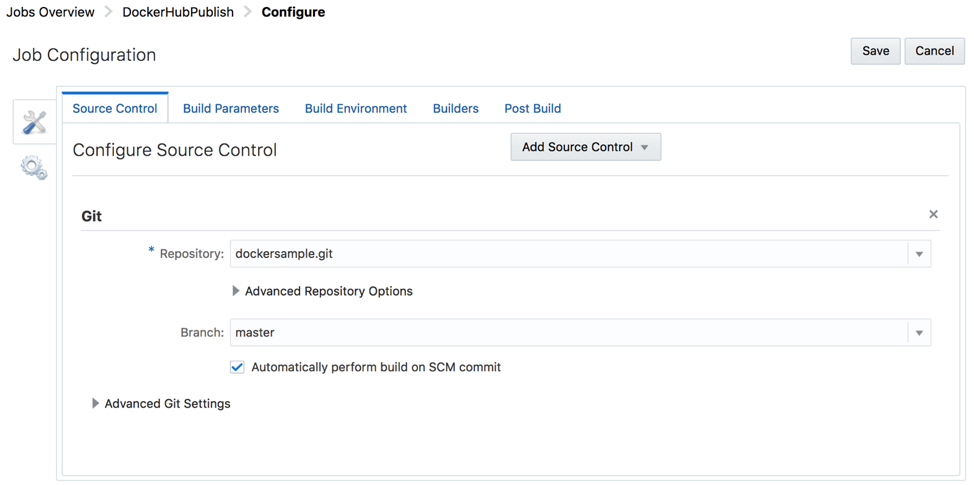

In Developer Cloud Service, you can tie your build jobs to your Git-based source code repositories, and you can dedicate specific build jobs to specific branches of your code.

Automate Execution of Build Job

Usually, you’ll aim for the build job to automatically execute whenever someone changes the code that your CI/CD relies on. This will mean hooking up your source repository with your build job through your CI/CD engine.

Some teams automate execution of Docker builds based on a schedule. For example, every night, after the team is done with that day’s development effort, a build job will pick up the latest changes and deploy them on QA instances.

In Oracle Developer Cloud Service, for example, you can check a box that tells a build job to automatically start when a change has been made to a specific branch of your Git repository. You’ll usually associate this with changes to the master branch, which will contain code that is “production ready” and has passed peer review. Alternatively, you can set a schedule so that your build jobs run at specific times.

Build job configured to pick up code from a specific branch of the Git repository and execute automatically when changes have been made to this branch.

Now that we have created the Docker images and published them, the next step is getting them deployed and running on a Kubernetes cluster. First, we’ll want to provision this environment.

Getting a Kubernetes cluster up and running, let alone a production-ready one, has historically not been straightforward. While purists (and those learning Kubernetes) might choose to stand up a Kubernetes cluster the hard way, most of us are looking for easy and automated ways to make this happen. There have been a large number of projects from vendors and the Kubernetes community in this area, many in various stages of ongoing development.

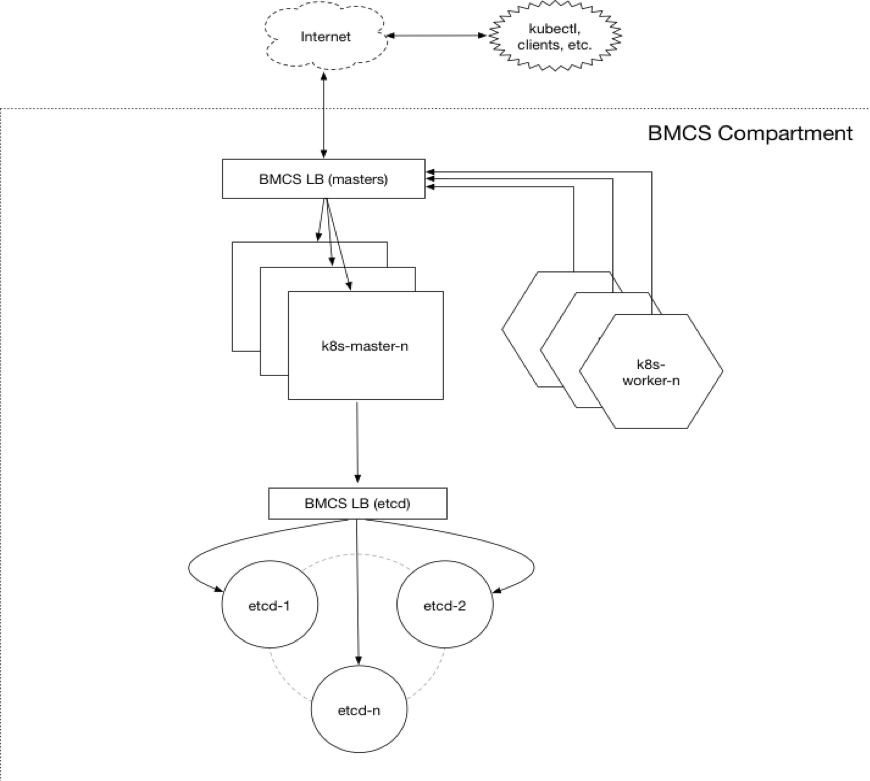

The Terraform Kubernetes Installer is an open source Terraform template for easily standing up a Kubernetes Cluster on Oracle Cloud Infrastructure (OCI). This allows customers to combine the production-grade container orchestration of Kubernetes with the control, security, and predictable performance of a cloud platform.

What it Does

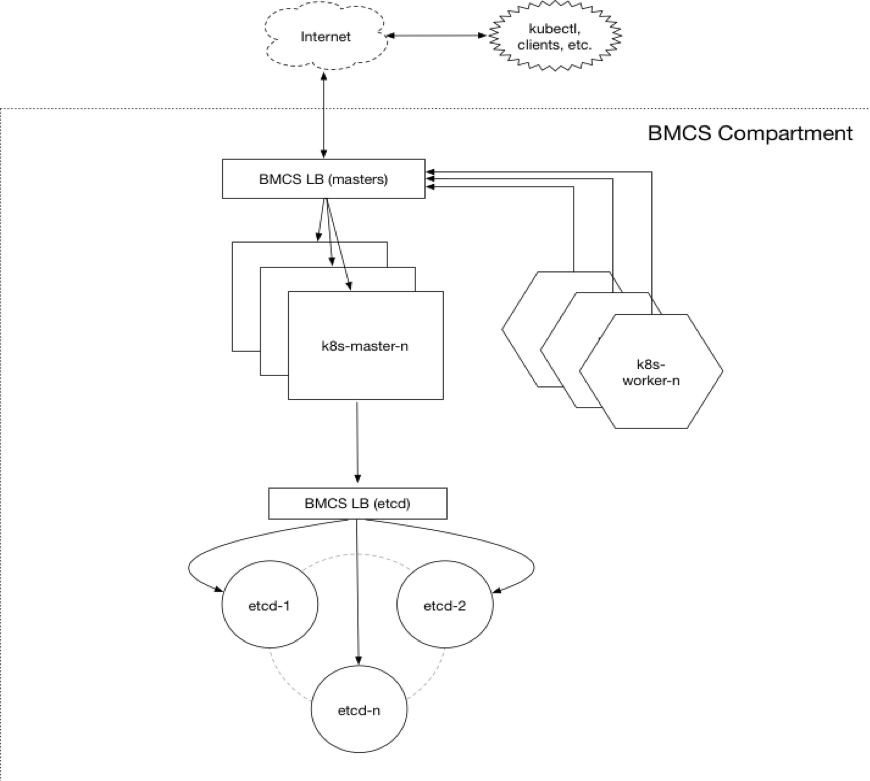

The Terraform Kubernetes Installer provides a set of Terraform modules and sample base configurations to provision and configure a highly available and configurable Kubernetes cluster on Oracle Cloud Infrastructure (OCI). This includes a Virtual Cloud Network (VCN) and subnets, instances for the Kubernetes control plane to run on, and Load Balancers to front-end the etcd and Kubernetes master clusters.

The base configuration supports a number of input variables that allow you to specify the Kubernetes master and node shapes/sizes and how they are placed across the underlying availability domains (ADs).

You can specify Bare Metal shapes (no hypervisor!) in addition to VM shapes to leverage the full power and performance of OCI for your Kubernetes clusters. The nodes are also labeled with metadata such as their availability domain, which supports Kubernetes multi-zone deployments by letting the Kubernetes scheduler spread pods across availability domains. You can also add and remove nodes from your cluster using Terraform, as documented in the README.

If your requirements extend beyond the base configuration, the modules can also be used to form your own customized configuration.

Provision a Kubernetes Cluster via CLI

Prerequisites

- Download and install [Terraform](https://terraform.io/) (v0.10.3 or later).

- Download and install the [OCI Terraform Provider](https://github.com/oracle/terraform-provider-oci/releases) (v2.0.0 or later).

- Create a Terraform configuration file at ~/.terraformrc that specifies the path to the OCI provider.

- Ensure you have kubectl installed if you plan to interact with the cluster locally.
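
For the ~/.terraformrc prerequisite, a minimal sketch of generating that file from a script might look like the following. It assumes the legacy `providers { ... }` block used by Terraform releases of this era for locally downloaded provider binaries. The `write_terraformrc` helper and the example paths are hypothetical, so check the provider's own installation notes for the exact format your version expects.

```shell
#!/bin/sh
# Sketch: write a Terraform CLI config that points at a locally downloaded
# OCI provider binary. Helper name and paths are hypothetical placeholders.
set -eu

# write_terraformrc <provider_binary_path> <config_file>
# Refuses to overwrite an existing config file.
write_terraformrc() {
  if [ -e "$2" ]; then
    echo "refusing to overwrite existing file: $2" >&2
    return 1
  fi
  cat > "$2" <<EOF
providers {
  oci = "$1"
}
EOF
}

# Example call (assumed paths), left commented out on purpose:
# write_terraformrc "$HOME/terraform-providers/terraform-provider-oci" "$HOME/.terraformrc"
```

The overwrite guard is a deliberate choice: a CLI config file often carries other settings, and silently replacing it would be an unpleasant surprise.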

Customize the Configuration

Create a terraform.tfvars file in the project root that specifies your configuration.

# start from the included example

$ cp terraform.example.tfvars terraform.tfvars

- Set mandatory OCI input variables relating to your tenancy, user, and compartment.

- Override optional input variables to customize the default configuration.
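
Before running Terraform, it can help to confirm that the mandatory values were actually filled in. The check below is only a sketch: the variable names in `REQUIRED_VARS` are assumptions based on common OCI provider settings, and `check_tfvars` is a hypothetical helper, so compare the list against terraform.example.tfvars before relying on it.

```shell
#!/bin/sh
# Sketch: fail fast if terraform.tfvars is missing a mandatory OCI setting.
# The variable names are assumptions; compare with terraform.example.tfvars.
set -eu

REQUIRED_VARS="tenancy_ocid user_ocid compartment_ocid fingerprint private_key_path"

# check_tfvars <file>
# Prints each variable that is absent or set to an empty string,
# and returns 1 if any were found.
check_tfvars() {
  missing=0
  for name in $REQUIRED_VARS; do
    # Match lines such as: tenancy_ocid = "some-non-empty-value"
    if ! grep -Eq "^[[:space:]]*${name}[[:space:]]*=[[:space:]]*\"[^\"]+\"" "$1"; then
      echo "missing or empty: $name"
      missing=1
    fi
  done
  return "$missing"
}
```

A build step could then run something like `check_tfvars terraform.tfvars && terraform plan`, so that a half-edited configuration stops the job early.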

Deploy the Kubernetes Cluster

$ terraform init

$ terraform plan

$ terraform apply

Access the Cluster

The cluster typically becomes accessible about 5 minutes after terraform apply completes, though this varies with the overall configuration, instance counts, and shapes. A working kubeconfig can be found in the ./generated folder or generated on the fly using the kubeconfig Terraform output variable.
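
One way to wire the generated kubeconfig into a shell session is sketched below. The file name `kubeconfig` inside ./generated and the `use_cluster` helper are assumptions made for illustration; adjust them to whatever your Terraform run actually produces.

```shell
#!/bin/sh
# Sketch: point kubectl at the kubeconfig produced by the Terraform run.
# The generated/kubeconfig file name is an assumption.
set -eu

# use_cluster <project_root>
# Exports KUBECONFIG if the generated file exists, otherwise returns 1.
use_cluster() {
  cfg="$1/generated/kubeconfig"
  if [ ! -f "$cfg" ]; then
    echo "kubeconfig not found at $cfg; has terraform apply finished?" >&2
    return 1
  fi
  KUBECONFIG="$cfg"
  export KUBECONFIG
  echo "KUBECONFIG=$KUBECONFIG"
}

# Possible follow-up once the cluster is reachable (commented out here):
# use_cluster "$(pwd)" && kubectl cluster-info && kubectl get nodes
```

If the file is not on disk, `terraform output kubeconfig` redirected to a file of your choice should give an equivalent starting point, per the output variable mentioned above.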

Provision a Kubernetes Cluster Automatically in a CI/CD Pipeline

Oracle Developer Cloud Service supports HashiCorp Terraform in the build pipeline, so provisioning Oracle Cloud Infrastructure can be part of the pipeline’s automation.

To execute the Terraform scripts that, for example, provision a Kubernetes cluster as part of a CI/CD pipeline, you need to upload the scripts to the Git repository.

Pushing Scripts to Git Repository on Oracle Developer Cloud

Command_prompt:> cd <path to the Terraform script folder>

Command_prompt:> git init

Command_prompt:> git add --all

Command_prompt:> git commit -m "<some commit message>"

Command_prompt:> git remote add origin <Developer Cloud Git repository HTTPS URL>

Command_prompt:> git push origin master

How to Create a Terraform Build Job

- Open your Developer Cloud Service project

- Go to Build

- Add ‘New Job’

- Give it a name and choose your software template

- Configure your Job

- In ‘Source Control’, add Git as your source control and select your Terraform repository.

- Go to the ‘Builders’ tab and add a ‘Unix Shell Builder’. Here you type the commands you need.

- Go to the ‘Post Build’ tab and, under ‘Post Build Actions’, select ‘Artifact Archiver’ and type the file(s) you want to add to the archive after the build.

- Save the job.

- Run the job.

- Use the ‘Build Log’ to check what is going on and the Compute Service Console to see the output.
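
The commands typed into the Unix Shell Builder could resemble the sketch below. It is an assumption about how such a step might be written, not the product's prescribed script: it uses a saved plan file so that nothing prompts for input during an unattended build, and it only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch: non-interactive Terraform run for a CI shell step.
# The plan file name (tfplan) is an arbitrary choice.
set -eu

DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

# Print a command, and run it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "$DRY_RUN" = "0" ]; then "$@"; fi
}

provision_cluster() {
  # -input=false stops Terraform from prompting, which would hang a CI job.
  run terraform init -input=false
  # Save the plan so the apply step executes exactly what was reviewed.
  run terraform plan -input=false -out=tfplan
  # Applying a saved plan file does not ask for confirmation.
  run terraform apply -input=false tfplan
}

provision_cluster
```

If you archive build output, the saved plan or the generated kubeconfig are plausible candidates for the Artifact Archiver, depending on what later jobs need.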

Deploy Container Artifacts from a Registry to Kubernetes (Target Environment)

To deploy Docker images to a Kubernetes environment, you’ll use the kubectl command line and create scripts that you can then run as part of your CD process.

The most important command is the kubectl create command, which deploys an application from a definition file. You can use the kubectl get commands to monitor the nodes, services, and pods you create. Note that you’ll want to have access to the kubeconfig file for the cluster you are working on.

Below you can see an example shell script that was used to deploy a Docker image to a cluster.

kubectl get nodes

kubectl create -f nodejs_micro.yaml

sleep 120

kubectl get services nodejsmicroJODU-k8s-service

kubectl get pods

kubectl describe pods
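
The fixed `sleep 120` in the script above waits the full two minutes even when the deployment is ready sooner, and may not wait long enough when it is slow. A possible alternative, sketched here with a hypothetical `wait_until` helper, is to poll a check command until it succeeds or a timeout expires.

```shell
#!/bin/sh
# Sketch: poll until a check succeeds instead of sleeping a fixed time.
# wait_until is a hypothetical helper, not part of kubectl.
set -eu

# wait_until <timeout_seconds> <interval_seconds> <command...>
# Returns 0 as soon as the command succeeds, 1 once the timeout is reached.
wait_until() {
  timeout="$1"
  interval="$2"
  shift 2
  elapsed=0
  until "$@" >/dev/null 2>&1; do
    if [ "$elapsed" -ge "$timeout" ]; then
      echo "timed out after ${timeout}s waiting for: $*" >&2
      return 1
    fi
    sleep "$interval"
    elapsed=$((elapsed + interval))
  done
}

# Possible use in the deployment script (commented out here):
# kubectl create -f nodejs_micro.yaml
# wait_until 120 5 kubectl get services nodejsmicroJODU-k8s-service
```

Note that `kubectl get services` succeeds as soon as the service object exists, so a stricter readiness check of your own may be a better probe to pass in.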

Summary

Containers improve and simplify the Continuous Integration and Continuous Delivery cycle. Combining a cloud runtime platform for Docker and Kubernetes with an end-to-end DevOps automation platform allows you to effectively leverage these technologies, thereby improving your team’s development and deployment cycles.
