How LangChain Enhances the Performance of Large Language Models

Explore how LangChain can enhance the capabilities of Large Language Model applications, and learn how its components work together to improve performance.


What do you think of the artificial intelligence development market? According to a MarketsandMarkets report, it is projected to grow at a CAGR of nearly 36.8% between 2023 and 2030, so things are changing and growing quickly. This growth has paved the way for Large Language Models (LLMs) to do things they couldn't before. A framework called LangChain has the potential to change how we use LLMs in generative AI development. In this article, we take a deep dive into LangChain, covering everything from its key principles to how it can be used in real-world applications. By the end, you'll have a better understanding of how it may change the way AI generates content.

The Concept of LangChain

LangChain is exciting because it takes the powerful capabilities of Large Language Models (LLMs) such as GPT-3 and builds on them. While LLMs are impressive, their output does not always match the finesse of human writing: it can fall short on grammar, style, or context. LangChain addresses this by using multiple specialized models that work together.

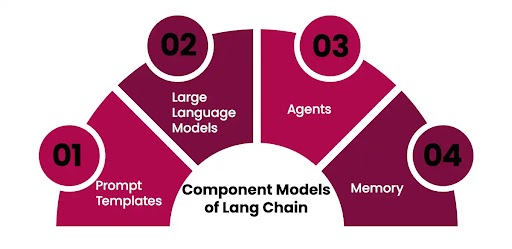

Component Models and Their Exalted Roles

Within LangChain, each component takes responsibility for a specific part of the content generation process.

These facets include but are not confined to:

Prompt Templates

Prompt templates are the foundation of LangChain: predefined structures that guide the content generation process. They come in a variety of formats, such as "chatbot"-style conversation, ELI5 (Explain Like I'm 5) question answering, and more. These templates give LLMs instructions on how to approach a language generation task. Think of them as blueprints that outline the structure and direction of a response or conversation.
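To make the blueprint idea concrete, here is a minimal sketch in plain Python. It deliberately uses the standard library's `string.Template` rather than LangChain's own classes, and the template names (`chatbot`, `eli5`) and the `render_prompt` helper are hypothetical, chosen only for illustration.

```python
from string import Template

# Hypothetical template registry; the names and wording are illustrative,
# not built-in LangChain templates.
TEMPLATES = {
    "chatbot": Template("You are a helpful assistant.\nUser: $question\nAssistant:"),
    "eli5": Template("Explain the following as if to a five-year-old: $question"),
}


def render_prompt(style: str, question: str) -> str:
    """Fill the chosen template with the user's question."""
    return TEMPLATES[style].substitute(question=question)


# The same question can be framed differently by switching templates.
print(render_prompt("eli5", "Why is the sky blue?"))
print(render_prompt("chatbot", "Why is the sky blue?"))
```

The point of the sketch is that the template, not the caller, decides how the task is framed; the text handed to the model changes while the user's question stays the same.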

LLMs (Large Language Models)

LangChain stands where it does today because of LLMs such as GPT-3, BLOOM, and others. These models encode extensive knowledge of human language and can generate coherent, contextually relevant text. Within LangChain, LLMs supply the raw material for content generation: given the instructions in a prompt template, they interpret the input and produce human-readable text.

Agents

Agents play an important role in LangChain. They are decision-makers that determine which steps to take during the content generation process. For example, they can consult external tools such as web search or calculators, or query databases to gather additional information. Agents act as intelligent intermediaries that guide LLMs and contribute to the quality and accuracy of the generated content. They help make sure that what is produced aligns with the objectives and desired outcomes.
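The decide-then-act loop described above can be sketched in plain Python. This is an illustration under stated assumptions, not LangChain's agent API: the `choose_tool` rule is a simple stand-in for a decision that a real agent would delegate to an LLM, and the `calculator` and `lookup` tools and the `FACTS` table are hypothetical.

```python
import ast
import operator

# Arithmetic operators the toy calculator is allowed to apply.
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}


def calculator(expression: str) -> str:
    """Evaluate basic arithmetic such as '2 * (3 + 4)' without using eval()."""
    def _eval(node: ast.AST):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        raise ValueError("unsupported expression")
    return str(_eval(ast.parse(expression, mode="eval").body))


# Hypothetical stand-in for a database or web search.
FACTS = {"capital of france": "Paris"}


def lookup(term: str) -> str:
    """Return a stored fact, or a fallback message if nothing matches."""
    return FACTS.get(term.strip().lower(), "no entry found")


TOOLS = {"calculator": calculator, "lookup": lookup}


def choose_tool(query: str) -> str:
    """Stand-in for the LLM's decision: queries with digits go to the calculator."""
    return "calculator" if any(ch.isdigit() for ch in query) else "lookup"


def run_agent(query: str) -> str:
    """Pick a tool, run it, and report which tool produced the observation."""
    tool_name = choose_tool(query)
    observation = TOOLS[tool_name](query)
    return f"[{tool_name}] {observation}"


print(run_agent("2 * (3 + 4)"))
print(run_agent("capital of France"))
```

Keeping the tool registry separate from the decision function means new tools can be added without touching the loop that runs them.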

Memory

Memory in this context consists of short-term and long-term memory. Short-term memory retains information gathered within the same conversation or task. By recalling the immediate dialogue history, it keeps the text coherent and contextually relevant when you respond or ask something mid-conversation. Long-term memory, on the other hand, holds knowledge accumulated over time from different sources, such as past interactions. Drawing on it makes conversations more consistent and meaningful.
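One way to picture the two kinds of memory is a bounded buffer of recent turns next to a persistent store of facts. The sketch below is plain Python, not LangChain's memory classes; the `ConversationMemory` class, its method names, and the window size are assumptions made for illustration.

```python
from collections import deque


class ConversationMemory:
    """Toy memory: a sliding window of recent turns plus a key-value fact store."""

    def __init__(self, window: int = 4) -> None:
        # Short-term: only the most recent `window` turns are kept.
        self.short_term = deque(maxlen=window)
        # Long-term: facts that persist regardless of the window.
        self.long_term = {}

    def add_turn(self, speaker: str, text: str) -> None:
        """Record one dialogue turn; the oldest turn drops out when the window is full."""
        self.short_term.append(f"{speaker}: {text}")

    def remember(self, key: str, value: str) -> None:
        """Store a fact that should outlive the current window."""
        self.long_term[key] = value

    def build_context(self) -> str:
        """Combine both memories into text that could be prepended to a prompt."""
        facts = "\n".join(f"{k} = {v}" for k, v in sorted(self.long_term.items()))
        history = "\n".join(self.short_term)
        return f"Known facts:\n{facts}\n\nRecent turns:\n{history}"


memory = ConversationMemory(window=2)
memory.remember("user_name", "Ada")
memory.add_turn("user", "Hello")
memory.add_turn("assistant", "Hi, how can I help?")
memory.add_turn("user", "Summarize our chat")
print(memory.build_context())
```

With a window of two, the first "Hello" turn has already been dropped by the time the context is built, while the stored name is still available.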

The Symphonic Strategy of Sequencing

The sequential arrangement of these component models is central to the relevance and accuracy of the generated content. It resembles a carefully orchestrated symphony, where each model builds upon the work of its predecessor. For instance, a chain might begin with a contextual model to grasp the input, followed by a grammar correction component to refine the text. The final touches may be added by a stylistic model so that the output matches the desired writing style. This sequencing helps produce a coherent and contextually appropriate outcome.
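The idea of each stage building on its predecessor can be sketched as simple function composition. The stages below are trivial string functions standing in for the contextual, grammar, and stylistic models mentioned above; their names and the `run_chain` helper are hypothetical, and a real chain would call models at each step.

```python
from functools import reduce
from typing import Callable, List

# A stage takes text in and returns refined text.
Stage = Callable[[str], str]


def normalize_whitespace(text: str) -> str:
    """Stand-in for an input-understanding step: collapse stray whitespace."""
    return " ".join(text.split())


def fix_capitalization(text: str) -> str:
    """Stand-in for a grammar step: capitalize the first character."""
    return text[:1].upper() + text[1:]


def ensure_period(text: str) -> str:
    """Stand-in for a style step: make sure the sentence ends with punctuation."""
    return text if text.endswith((".", "!", "?")) else text + "."


def run_chain(stages: List[Stage], text: str) -> str:
    """Feed the output of each stage into the next, in order."""
    return reduce(lambda acc, stage: stage(acc), stages, text)


result = run_chain(
    [normalize_whitespace, fix_capitalization, ensure_period],
    "  each   stage builds on the last ",
)
print(result)
```

Order matters here: running `fix_capitalization` before `normalize_whitespace` on this input would try to capitalize a leading space and leave the sentence in lowercase.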

Real-World Applications and Advantages

LangChain boasts extensive applications across diverse domains. In content creation, it emerges as an invaluable tool for writers as it streamlines the writing process while enhancing the overall quality of produced content. Customer service chatbots stand to benefit immensely from LangChain's prowess, generating responses that are more human-like and contextually accurate, ultimately heightening user experiences.

Furthermore, LangChain finds its place in machine translation services, where it elevates both the quality and contextual relevance of translated texts. Automated report generation—an essential facet of business and data analysis—can also reap significant rewards from LangChain's incredible capabilities. In creative writing projects, it serves as an invaluable assistant in maintaining consistency in character dialogues and narrative style.

Challenges and an Optimistic Outlook

Despite its immense potential, LangChain does not exist without challenges. Sequentially integrating multiple models can prove computationally intensive, necessitating substantial computing resources. Additionally, achieving optimal synergy between component models and fine-tuning them for specific tasks and industries can be an intricate and time-consuming process.

However, with ongoing advancements in hardware capabilities and AI algorithms, these challenges are gradually becoming surmountable. The potential benefits, such as more accurate, contextually relevant, and human-like AI-generated content, remain compelling.

Conclusion

Generative AI development has seen a lot of advances, but nothing quite like LangChain. It’s an innovative force that breaks the barriers and limitations of LLMs. It is not a mere link in the chain but a transformative force that has the potential to reshape our interactions with AI-generated content. As it continues to evolve and become more accessible, LangChain is poised to play a pivotal role in enhancing the quality and context-awareness of AI-generated language, making it an indispensable cornerstone of next-generation AI applications. The journey is ongoing, yet the path ahead brims with undeniable promise—a tantalizing glimpse into a future where AI-generated content aligns flawlessly with human preferences: precise, contextually relevant, and harmoniously intertwined.

Opinions expressed by DZone contributors are their own.
