Leveraging ML-Based Anomaly Detection for 4G Networks Optimization

How the latest technology is helping cellular providers to improve their services.

Artificial intelligence and machine learning already have some impressive use cases for industries like retail, banking, or transportation. While the technology is far from perfect, the advancements in ML allow other industries to benefit as well. In this article, we will look at our own research on how to make the operations of internet providers more effective.

Improving 4G Networks Traffic Distribution With Anomaly Detection

Previous generations of cellular networks distributed network resources inefficiently, providing the same coverage to all territories all the time. As an example, picture a massive area with big cities, small towns, and forests that span for miles. All of these areas receive the same amount of coverage, although cities and towns need more internet traffic and forests require very little.

Considering the higher amount of traffic of modern 4G networks, cellular providers are able to achieve impressive energy savings and improve the experience of the customers by optimizing the utilization of frequency resources.

Machine learning-based anomaly detection makes it possible to predict traffic demand in various parts of the network, which helps operators distribute resources more appropriately. This article is based on our analysis of publicly available information and on our implementation of ML algorithms that solve this problem with one of the possible approaches.

There are multiple solutions for this particular problem. The most interesting include:

Anomaly detection and classification in cellular networks using automatic labeling technique for applying supervised learning suitable for 2G/3G/4G/5G networks.

CellPAD is a unified performance anomaly detection framework for detecting performance anomalies in cellular networks via regression analysis.

Data Overview

The research was done with information extracted from a real LTE network. The dataset included 14 features in total: 12 numerical and 2 categorical. We had almost 40,000 data records with no missing values. The data analytics team divided the information into two labeled classes:

Normal or 0: the data doesn’t need any reconfiguration or redistribution

Unusual or 1: where the reconfiguration is required due to abnormal activities

The labeling was executed manually based on the amount of traffic in particular parts of the network. However, there is an option for automated data labeling leveraging neural networks. Look up Amazon SageMaker Ground Truth for this function, or Data Labeling Service from Google’s AI Platform.

Data Analysis Results

Analysis of the labeled data showed us that the dataset was imbalanced. We had 26,271 normal (class 0) and 10,183 unusual (class 1) records.

We then built a Pearson correlation matrix for the dataset:

4G networks utilization features correlation plot (Pearson)

As you can see, many of the features are heavily correlated. The correlation shows how different attributes in the dataset are connected to each other. It serves as a basic quantity for different modeling techniques and can sometimes hint at a causal relationship or help us predict one attribute from another.

In this case, some attributes are perfectly positively or negatively correlated, which may lead to the multicollinearity problem and hurt the performance of a model. Multicollinearity occurs when one predictor variable in a multiple regression model can be predicted linearly from the other variables with high accuracy.

Luckily, decision trees and boosted trees cope with this issue, because at each split they pick just one of the perfectly correlated features. Other models, such as logistic regression or linear regression, can suffer from this problem and need extra adjustment before training. Other ways to deal with multicollinearity include principal component analysis (PCA) and the deletion of perfectly correlated features. The best option for us was to use tree-based algorithms because they don't need any adjustments to deal with this problem.
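For models that do need this adjustment, the deletion of perfectly correlated features can be sketched as follows. This is a minimal illustration, not the code used in the research: the helper `drop_perfectly_correlated`, the `0.999` threshold, and the toy column names are all assumptions made for the example.

```python
import numpy as np

def drop_perfectly_correlated(X, names, threshold=0.999):
    """Return the names of columns kept after removing near-duplicate features.

    Hypothetical helper: a column is dropped when its absolute Pearson
    correlation with an already kept column reaches the threshold.
    """
    corr = np.corrcoef(X, rowvar=False)  # Pearson correlation between columns
    keep = []
    for j in range(X.shape[1]):
        if all(abs(corr[j, k]) < threshold for k in keep):
            keep.append(j)
    return [names[j] for j in keep]

# Toy data (not the LTE dataset): two columns are exact linear copies of "a".
rng = np.random.default_rng(0)
a = rng.normal(size=200)
b = rng.normal(size=200)
X = np.column_stack([a, 2 * a + 1, -a, b])

kept = drop_perfectly_correlated(X, ["a", "a_scaled", "a_negated", "b"])
print(kept)  # ['a', 'b']
```

Both the positively correlated copy and the negated copy are removed, since only the absolute value of the correlation matters here.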

Basic accuracy is one of the key metrics for evaluating classification: it is the ratio of correct predictions to the total number of samples in the dataset. As mentioned previously, our classes are imbalanced, which means that basic accuracy can be misleading: a high value does not show the prediction capacity for the minority class.

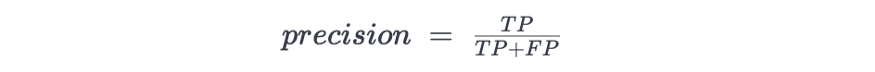

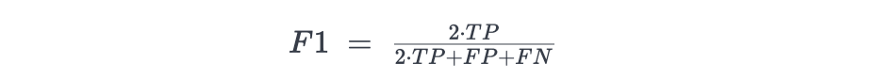

We can have accuracy close to 100% but still have low prediction capacity for a particular class because anomalies are the rarest samples in the dataset. Instead of accuracy, we decided to use the F1 metric, the harmonic mean of precision and recall, which is well suited to imbalanced classification. The F1 score ranges from 0 to 1, where 0 is a total failure and 1 is a perfect classification.

The samples can be sorted in four ways:

- True Positive, TP — a positive label and a positive classification

- True Negative, TN — a negative label and a negative classification

- False Positive, FP — a negative label and a positive classification

- False Negative, FN — a positive label and a negative classification

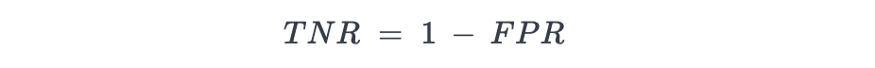
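To see why accuracy alone can mislead on this dataset, consider a trivial baseline that labels every record as normal. The short calculation below uses the class counts reported earlier; the baseline itself is a hypothetical illustration, not a model from the research.

```python
n_normal, n_unusual = 26_271, 10_183  # class counts reported in the article

# Hypothetical baseline: predict "normal" (class 0) for every record,
# so no sample is ever classified as positive.
tp, fp = 0, 0
tn, fn = n_normal, n_unusual

accuracy = (tp + tn) / (tp + tn + fp + fn)
precision = tp / (tp + fp) if (tp + fp) else 0.0
recall = tp / (tp + fn) if (tp + fn) else 0.0
f1 = (2 * precision * recall / (precision + recall)
      if (precision + recall) else 0.0)

print(f"accuracy = {accuracy:.3f}")  # about 0.721
print(f"F1       = {f1:.3f}")        # 0.000
```

The baseline is right about 72% of the time while detecting no anomalies at all, which the F1 score of 0 makes obvious.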

The metrics commonly used for imbalanced classes are built from these four counts:

- True Positive Rate, Recall, or Sensitivity: TP / (TP + FN)

- False Positive Rate or Fall-out: FP / (FP + TN)

- Precision: TP / (TP + FP)

- True Negative Rate or Specificity: TN / (TN + FP)
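The four rates listed above follow directly from the TP, TN, FP, and FN counts. The sketch below computes them with a hypothetical helper, `rates`, using made-up counts chosen only for illustration.

```python
def rates(tp, tn, fp, fn):
    """Compute the standard rates from confusion-matrix counts."""
    return {
        "recall": tp / (tp + fn),        # true positive rate, sensitivity
        "fall_out": fp / (fp + tn),      # false positive rate
        "precision": tp / (tp + fp),
        "specificity": tn / (tn + fp),   # true negative rate
    }

# Made-up counts, not results from the research.
r = rates(tp=90, tn=880, fp=20, fn=10)
for name, value in r.items():
    print(f"{name}: {value:.3f}")
```

Note that fall-out and specificity always sum to 1, because both are fractions of the same set of actual negatives.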

Algorithms of Our Choice

DecisionTreeClassifier was a great place to begin for us, as we got 94% accuracy on the test set without any additional tweaks. To make our results even better, we moved to BaggingClassifier, an ensemble method built on decision trees, which provided us with a score of 96% according to the F1-score metric. We also tried the RandomForestClassifier and GradientBoostingClassifier algorithms, which gave us 91% and 93% accuracy, respectively.
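A comparison of this kind can be sketched with scikit-learn, where all four classifiers live. The original LTE dataset is not reproduced here, so the example assumes a synthetic stand-in from `make_classification` with 12 numerical features and a similar class ratio; the scores it prints will not match the figures above.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import (
    BaggingClassifier,
    GradientBoostingClassifier,
    RandomForestClassifier,
)
from sklearn.metrics import f1_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in (assumption): 12 numerical features, about 28% positives.
X, y = make_classification(
    n_samples=2000, n_features=12, n_informative=6,
    weights=[0.72, 0.28], random_state=0,
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

# Out-of-the-box models with default hyperparameters.
models = {
    "DecisionTreeClassifier": DecisionTreeClassifier(random_state=0),
    "BaggingClassifier": BaggingClassifier(random_state=0),
    "RandomForestClassifier": RandomForestClassifier(random_state=0),
    "GradientBoostingClassifier": GradientBoostingClassifier(random_state=0),
}

scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    scores[name] = f1_score(y_test, model.predict(X_test))
    print(f"{name}: F1 = {scores[name]:.3f}")
```

The split is stratified so that the test set keeps the same class ratio as the training set, which matters when the positive class is the minority.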

Feature Engineering Step

We achieved great results thanks to tree-based algorithms, but there was still some room to grow, so we decided to improve the accuracy further. While processing data, we added time features (minutes and hours), extracted the part of the day from the “time” parameter, and tried time-lag features, but these moves didn’t help much. What did improve the outcomes of the model was the use of upsampling techniques, which allowed feature transformation and balancing of the data.
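The article does not say which upsampling technique was used. One simple possibility is random oversampling, which duplicates minority-class rows until both classes are the same size. The sketch below assumes that approach and a binary label; the helper `upsample_minority` is hypothetical.

```python
import numpy as np

def upsample_minority(X, y, seed=0):
    """Randomly duplicate minority-class rows until the two classes are equal.

    Hypothetical helper for binary labels; sampling is done with replacement.
    """
    rng = np.random.default_rng(seed)
    classes, counts = np.unique(y, return_counts=True)
    minority = classes[np.argmin(counts)]
    n_extra = counts.max() - counts.min()
    minority_idx = np.flatnonzero(y == minority)
    extra_idx = rng.choice(minority_idx, size=n_extra, replace=True)
    idx = np.concatenate([np.arange(len(y)), extra_idx])
    rng.shuffle(idx)  # avoid leaving all duplicates at the end
    return X[idx], y[idx]

# Toy data: 7 normal records and 3 unusual ones.
X = np.arange(20, dtype=float).reshape(10, 2)
y = np.array([0, 0, 0, 0, 0, 0, 0, 1, 1, 1])

X_bal, y_bal = upsample_minority(X, y)
print(np.bincount(y_bal))  # [7 7]
```

Oversampling is usually applied to the training split only, so that duplicated rows do not leak into the test set and inflate the scores.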

Parameters Tuning Step

All out-of-the-box algorithms showed results that exceeded 90%, which was great, but with the grid search technique (GridSearch), it was possible to improve them even further. Among the four algorithms, GridSearch was the most effective for GradientBoostingClassifier and helped to achieve an astonishing 99% accuracy, which met our initial goal.
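In scikit-learn, this kind of tuning is typically done with `GridSearchCV`. The sketch below is an assumption about how such a search might look: the parameter grid is illustrative rather than the one used in the research, and the data is again a synthetic stand-in.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import GridSearchCV

# Synthetic stand-in for the LTE dataset (assumption).
X, y = make_classification(
    n_samples=600, n_features=12, n_informative=6,
    weights=[0.72, 0.28], random_state=0,
)

# Illustrative grid; the values tried in the research are not given.
param_grid = {
    "n_estimators": [50, 100],
    "max_depth": [2, 3],
    "learning_rate": [0.05, 0.1],
}

search = GridSearchCV(
    GradientBoostingClassifier(random_state=0),
    param_grid,
    scoring="f1",  # optimize F1 instead of plain accuracy
    cv=3,
)
search.fit(X, y)

print(search.best_params_)
print(f"best cross-validated F1 = {search.best_score_:.3f}")
```

Scoring by F1 keeps the search aligned with the metric chosen for the imbalanced classes, and the cross-validated score gives a less optimistic estimate than a single train/test split.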

Conclusion

The issue we highlighted in this article is very common among all mobile internet providers that offer 3G or 4G coverage and can be dealt with to improve the experience of users. In this scenario, the “anomaly” is seen as a waste of internet traffic. Machine learning models can make a decision on the effectiveness of the distribution of resources based on input data. The described usage of GradientBoostingClassifier tuned with GridSearch can help companies to evaluate the efficiency of traffic distribution and advise them which parameters need to be changed in order to provide the best user experience.

Ineffective traffic utilization is not the only problem that data science can solve in the telecom industry. Solutions like fraud detection, predictive analytics, customer segmentation, churn prevention, and lifetime value prediction are also possible with the right team of developers.

Published at DZone with permission of Oleksandr Stefanovskyi. See the original article here.
