Real-Time Stream Processing With Apache Kafka Part 2: Kafka Stream API

Get a deep dive into Kafka's Streams API.

This is the second article in a four-part series. In the previous article, we introduced Apache Kafka. In this article, we briefly discuss the Kafka APIs, with special attention given to Kafka's Streams API.

Kafka Terminologies

Before we dive into Kafka Streams, here is a quick refresher on some important Kafka concepts.

Topic: A topic is a category or feed name to which records are published. Topics in Kafka are always multi-subscriber, which means a topic can have zero, one, or many consumers that read data from it.

Producers and Consumers: Producers publish data to Kafka brokers, and consumers read data from brokers. Producers and consumers are decoupled from each other and run outside of the broker.

Kafka Broker: A Kafka broker is a server that runs Kafka. Producers are processes that publish data (push messages) into Kafka topics on the brokers. A consumer pulls messages off a Kafka topic.

Kafka Cluster: A Kafka cluster, sometimes referred to simply as a cluster, is a group of one or more Kafka brokers.

Data: Data is stored in Kafka topics, and every topic is split into one or more partitions.
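To make the refresher concrete, here is a minimal plain-Java sketch of a single topic partition as an append-only log that is read by two independent consumers. It is only a model of the idea, not the Kafka client API: the class `PartitionLogSketch`, its methods, and the consumer names are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Plain-Java model of one topic partition; not the Kafka client API.
final class PartitionLogSketch {
    // Append-only list of records; the list index plays the role of the offset.
    private final List<String> log = new ArrayList<>();
    // Each consumer tracks its own read position independently.
    private final Map<String, Integer> offsets = new HashMap<>();

    // Producer side: append a record and return its offset.
    int append(String value) {
        log.add(value);
        return log.size() - 1;
    }

    // Consumer side: pull every record this consumer has not seen yet.
    List<String> poll(String consumerId) {
        int from = offsets.getOrDefault(consumerId, 0);
        List<String> batch = new ArrayList<>(log.subList(from, log.size()));
        offsets.put(consumerId, log.size());
        return batch;
    }

    public static void main(String[] args) {
        PartitionLogSketch partition = new PartitionLogSketch();
        partition.append("a");
        partition.append("b");
        System.out.println(partition.poll("dashboard")); // the first two records
        partition.append("c");
        System.out.println(partition.poll("dashboard")); // only the new record
        System.out.println(partition.poll("billing"));   // all records, read independently
    }
}
```

Because every consumer keeps its own position, adding a second reader does not affect the first one, which is the sense in which a topic is multi-subscriber.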

Kafka APIs

As of today, Kafka offers the following five core APIs:

Producer API: This API allows an application to publish a stream of records to one or more Kafka topics.

Consumer API: This API allows an application to subscribe to one or more topics and process the records published to those topics.

Streams API: This API allows an application to act as a stream processor. The application can consume records from one or more topics, process and transform them, and produce the resulting stream to one or more topics.

Connector API: This API allows for building and running reusable producers or consumers that connect Kafka topics to existing applications or data systems. For example, a connector to a relational database might capture every change to a table.

AdminClient API: This API allows for managing and inspecting topics, brokers, and other Kafka objects.

Kafka Streams API

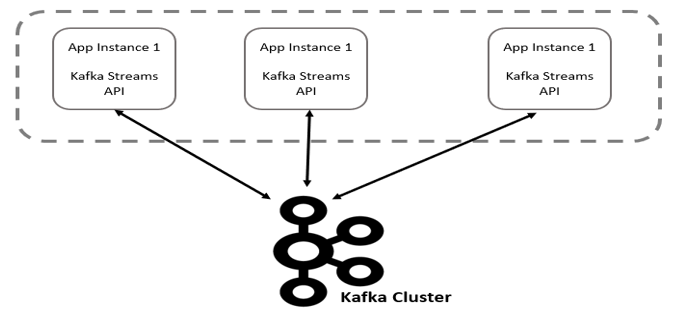

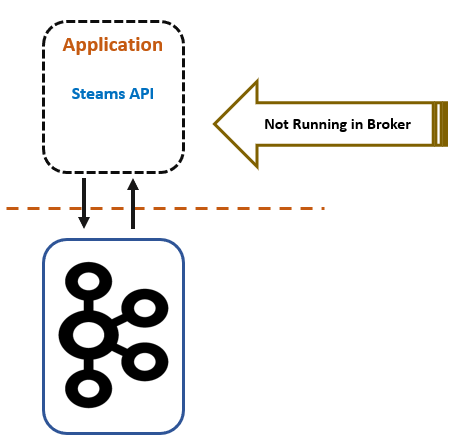

Kafka Streams API is a Java library that allows you to build real-time applications. These applications can be packaged, deployed, and monitored like any other Java application — there is no need to install separate processing clusters or similar special-purpose and expensive infrastructures!

The Streams API is scalable, lightweight, and fault-tolerant, and it supports both stateless and stateful processing.

Stream

A stream is a powerful abstraction provided by Kafka Streams. It represents an unbounded, continuously updating data set. Just like a topic in Kafka, a stream in the Kafka Streams API consists of one or more stream partitions. A stream partition is an ordered, replayable, and fault-tolerant sequence of immutable data records, where a data record is defined as a key-value pair.

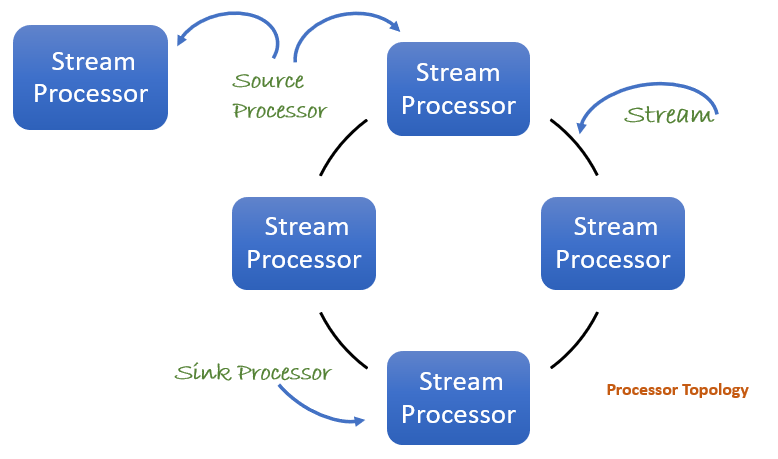

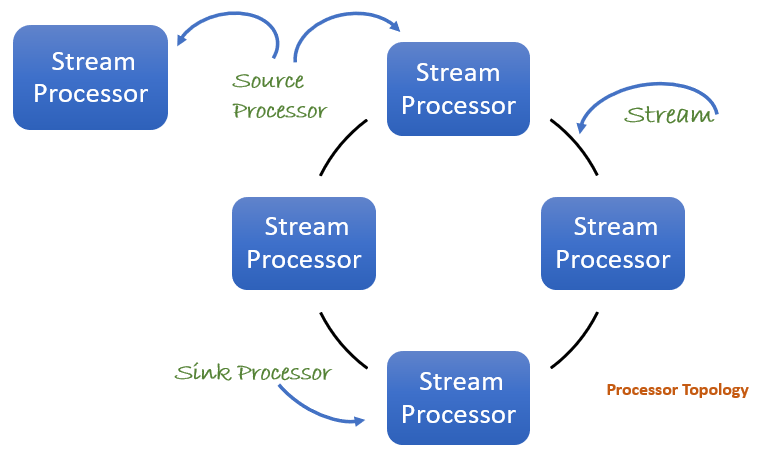

Processor Topology

A processor topology defines the computational logic for a stream. It is a graph of stream processors (nodes) that are connected by streams (edges). A topology can be defined by using the high-level Streams DSL or the low-level Processor API.
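The graph idea can be sketched in plain Java: each node holds a processing step and forwards its output along its outgoing edges. This is an illustrative model only, not the Kafka Streams DSL or Processor API. The `TopologySketch` and `Node` classes and the sample records are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

// Plain-Java model of a processor topology; not the Kafka Streams API.
final class TopologySketch {
    // One node of the graph: a processing step plus its outgoing edges.
    static final class Node {
        final String name;
        // Returns the records to forward; an empty list drops the input.
        final Function<String, List<String>> step;
        final List<Node> children = new ArrayList<>();
        // Output of this node when it has no children to forward to.
        final List<String> collected = new ArrayList<>();

        Node(String name, Function<String, List<String>> step) {
            this.name = name;
            this.step = step;
        }

        // Add an edge from this node to a child and return the child for chaining.
        Node to(Node child) {
            children.add(child);
            return child;
        }

        // Apply this node's step, then push each result along every outgoing edge.
        void process(String record) {
            for (String out : step.apply(record)) {
                if (children.isEmpty()) {
                    collected.add(out);
                }
                for (Node child : children) {
                    child.process(out);
                }
            }
        }
    }

    public static void main(String[] args) {
        Node source = new Node("source", r -> List.of(r));
        Node nonEmpty = new Node("filter", r -> r.isEmpty() ? List.of() : List.of(r));
        Node upper = new Node("map", r -> List.of(r.toUpperCase()));
        Node sink = new Node("sink", r -> List.of(r));
        source.to(nonEmpty).to(upper).to(sink);

        for (String r : List.of("speed=40", "", "speed=55")) {
            source.process(r);
        }
        System.out.println(sink.collected); // prints [SPEED=40, SPEED=55]
    }
}
```

In a real application the library builds and runs this graph for you; the sketch only shows how records flow from node to node along the edges.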

Stream Application

A stream processing application is any program that uses the Kafka Streams library. In most cases, it is an application that you write and that defines its computational logic as a processor topology. This application does not run inside a broker; it runs in its own separate JVM, possibly in a separate cluster altogether.

Stream Processor

A stream processor (a node in the graph) represents a processing step in a processor topology. One of the most common uses of a node is to transform data. Standard operations such as map, filter, and join are examples of stream processors that are available in Kafka Streams. We have two options to define stream processors:

Use the high-level Streams DSL provided by the Kafka Streams API.

For more fine-grained control and flexibility, use the Processor API. Using this API, you can define and connect custom processors and directly interact with state stores.

There are two special types of processors:

Source Processor: A source processor is a special type of stream processor that does not have any upstream processors. It produces an input stream to its topology from one or multiple Kafka topics by consuming records from these topics and forwarding them to down-stream processors.

Sink Processor: A sink processor is a special type of stream processor that does not have down-stream processors. It sends any received records from its up-stream processors to a specified Kafka topic.
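The two definitions above translate directly into graph terms: a source is a node that nothing points to, and a sink is a node that points to nothing. The following plain-Java sketch finds both in a small graph. It is a model for illustration only; the class `TopologyGraphSketch` and the node names are hypothetical and are not part of the Kafka Streams API.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Plain-Java model of a processor topology as a directed graph.
final class TopologyGraphSketch {
    // Node name -> names of its downstream nodes (the outgoing edges).
    private final Map<String, List<String>> downstream = new LinkedHashMap<>();

    // Register a processor node that has no edges yet.
    void addNode(String name) {
        downstream.putIfAbsent(name, new ArrayList<>());
    }

    // Connect two nodes with a stream (edge) flowing from parent to child.
    void connect(String parent, String child) {
        addNode(parent);
        addNode(child);
        downstream.get(parent).add(child);
    }

    // Nodes that no other node points to: they have no upstream processors.
    Set<String> sources() {
        Set<String> result = new LinkedHashSet<>(downstream.keySet());
        downstream.values().forEach(result::removeAll);
        return result;
    }

    // Nodes with no outgoing edges: they have no downstream processors.
    Set<String> sinks() {
        Set<String> result = new LinkedHashSet<>();
        downstream.forEach((node, children) -> {
            if (children.isEmpty()) {
                result.add(node);
            }
        });
        return result;
    }

    public static void main(String[] args) {
        TopologyGraphSketch topology = new TopologyGraphSketch();
        topology.connect("gps-source", "drop-invalid");
        topology.connect("drop-invalid", "to-alert");
        topology.connect("to-alert", "alerts-sink");
        System.out.println("sources: " + topology.sources());
        System.out.println("sinks:   " + topology.sinks());
    }
}
```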

Kafka Stream DSL Terminologies

Below are the concepts you need to be familiar with. They will help you design effective processor topologies.

KStream

A KStream is an abstraction of a record stream, where each data record represents a self-contained datum in the unbounded data set. Data records are analogous to an INSERT. Think: adding more entries to an append-only ledger because no record replaces an existing row with the same key. These could be a credit card transaction, a page view event, or a server log entry.

KTable

A KTable is an abstraction of a changelog stream, where each data record represents an update. The value in a data record is interpreted as an UPDATE to the last value for the same record key. (If a corresponding key doesn't exist yet, the update will be considered an INSERT.) Using the table analogy, a data record in a changelog stream is interpreted as an UPSERT aka INSERT/UPDATE because any existing row with the same key is overwritten. Also, null values are interpreted in a special way: a record with a null value represents a DELETE or tombstone for the record's key.
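The difference between the two abstractions shows up when the same sequence of records is read both ways. The plain-Java sketch below sums values per key under the record-stream (INSERT) reading and keeps only the latest value per key under the changelog (UPSERT) reading, with a null value acting as a tombstone. It models the semantics only and does not use the real `KStream` or `KTable` types. The class and method names are hypothetical, and skipping null values in the stream view is a simplification of this sketch.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Plain-Java model of record-stream vs. changelog semantics; not the Kafka Streams API.
final class StreamVsTableSketch {
    // A key-value data record; a null value models a tombstone.
    static final class DataRecord {
        final String key;
        final Integer value;

        DataRecord(String key, Integer value) {
            this.key = key;
            this.value = value;
        }
    }

    // Stream-like view: every record is an independent fact, so all values per key are summed.
    static Map<String, Integer> sumAsStream(List<DataRecord> records) {
        Map<String, Integer> totals = new LinkedHashMap<>();
        for (DataRecord r : records) {
            if (r.value != null) { // simplification: tombstones carry nothing to add
                totals.merge(r.key, r.value, Integer::sum);
            }
        }
        return totals;
    }

    // Table-like view: each record upserts its key, and a null value deletes the key.
    static Map<String, Integer> latestAsTable(List<DataRecord> records) {
        Map<String, Integer> table = new LinkedHashMap<>();
        for (DataRecord r : records) {
            if (r.value == null) {
                table.remove(r.key);
            } else {
                table.put(r.key, r.value);
            }
        }
        return table;
    }

    public static void main(String[] args) {
        List<DataRecord> records = List.of(
                new DataRecord("alice", 1),
                new DataRecord("alice", 3),
                new DataRecord("bob", 2),
                new DataRecord("bob", null));
        System.out.println(sumAsStream(records));   // prints {alice=4, bob=2}
        System.out.println(latestAsTable(records)); // prints {alice=3}
    }
}
```

Read as a stream, alice has a total of 4 because both records count. Read as a table, alice has the value 3 because the second record replaced the first, and bob is gone because of the tombstone.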

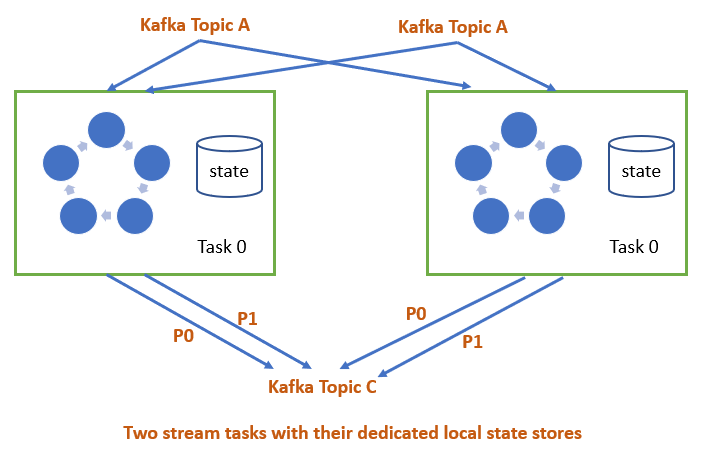

State Stores

Kafka Streams provides state stores, which can be used by stream processing applications to store and query data. This serves as an important capability when implementing stateful operations.

The Kafka Streams DSL, for example, automatically creates and manages such state stores when you are calling stateful operators, such as count() or aggregate(), or when you are windowing a stream.
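As a rough illustration of why a stateful operator needs a store, the sketch below counts records per key by reading the previous count, incrementing it, and writing it back. A plain `HashMap` stands in for the state store here. The class `CountStoreSketch` and its methods are hypothetical, and a real state store additionally provides persistence and fault tolerance, which this model leaves out.

```java
import java.util.HashMap;
import java.util.Map;

// Plain-Java model of a count operator backed by a key-value store; not the Kafka Streams API.
final class CountStoreSketch {
    // Stands in for a local key-value state store.
    private final Map<String, Long> store = new HashMap<>();

    // Stateful step: read the current count for the key, add one, and write it back.
    long onRecord(String key) {
        long updated = store.getOrDefault(key, 0L) + 1;
        store.put(key, updated);
        return updated;
    }

    // Read-only lookup of the latest count; unseen keys report zero.
    long get(String key) {
        return store.getOrDefault(key, 0L);
    }

    public static void main(String[] args) {
        CountStoreSketch counts = new CountStoreSketch();
        for (String vehicle : new String[] {"truck-1", "truck-2", "truck-1"}) {
            counts.onRecord(vehicle);
        }
        System.out.println(counts.get("truck-1")); // prints 2
        System.out.println(counts.get("truck-9")); // prints 0
    }
}
```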

Time

A critical aspect in stream processing is the notion of time. There are three notions of time in Kafka Streams. Let’s try to understand this with an example. Assume that there is a fleet management system that processes data emitted by a GPS signal emitter fitted in vehicles.

Event Time

This is the point in time when the record is emitted by the device fitted in the vehicle.

Ingestion Time

This is the point in time when the emitted record is stored in a Kafka topic by the broker. How far ingestion time lags behind event time depends on the data communication mechanism. In most cases, ingestion time is almost the same as event time, as a timestamp gets embedded in the data record itself.

Processing Time

This is the point in time when the event or data record happens to be processed by the stream processing application (i.e. when the record is being consumed). The processing-time may be milliseconds, hours, or days later than the original event-time.
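For the fleet management example, the three notions of time can be thought of as three timestamps attached to one GPS reading. The sketch below is a plain-Java model with hypothetical names (`RecordTimesSketch`, `transportDelay`, `processingLag`) and made-up sample timestamps; it is not how Kafka stores timestamps internally.

```java
import java.time.Duration;
import java.time.Instant;

// Plain-Java model of the three notions of time for a single record.
final class RecordTimesSketch {
    final Instant eventTime;      // when the GPS device emitted the reading
    final Instant ingestionTime;  // when the broker stored it in the topic
    final Instant processingTime; // when the stream application consumed it

    RecordTimesSketch(Instant eventTime, Instant ingestionTime, Instant processingTime) {
        this.eventTime = eventTime;
        this.ingestionTime = ingestionTime;
        this.processingTime = processingTime;
    }

    // Time the reading spent travelling from the device to the broker.
    Duration transportDelay() {
        return Duration.between(eventTime, ingestionTime);
    }

    // Total time between the reading being emitted and being processed.
    Duration processingLag() {
        return Duration.between(eventTime, processingTime);
    }

    public static void main(String[] args) {
        RecordTimesSketch reading = new RecordTimesSketch(
                Instant.parse("2024-01-01T10:00:00Z"),
                Instant.parse("2024-01-01T10:00:02Z"),
                Instant.parse("2024-01-01T10:05:00Z"));
        System.out.println("transport delay: " + reading.transportDelay());
        System.out.println("processing lag:  " + reading.processingLag());
    }
}
```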

Transformations on Kafka Streams

The KTable and KStream interfaces support a variety of transformations. These transformations fall into one of the two following categories:

Stateless Transformations

Stateless transformations do not require state for processing, so they do not need a state store associated with the stream processor. Kafka 0.11.0 and later allows you to materialize the result of a stateless KTable transformation, which lets the result be queried through Interactive Queries.

To materialize a KTable, each of the stateless operations can be augmented with an optional queryableStoreName argument.
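To illustrate what "stateless" means here, the sketch below uses the standard `java.util.stream` API as a stand-in: both the filter and the map step look at exactly one record at a time and remember nothing between records. The Kafka Streams DSL offers operations with similar names, but this is not Kafka Streams code. The class name, the speed values, and the alert format are hypothetical.

```java
import java.util.List;
import java.util.stream.Collectors;

// Stateless processing modelled with java.util.stream; not the Kafka Streams API.
final class StatelessOpsSketch {
    // Keep readings above the speed limit and turn each one into an alert message.
    static List<String> speedingAlerts(List<Integer> speedsKmh, int limitKmh) {
        return speedsKmh.stream()
                .filter(speed -> speed > limitKmh)    // decides using only the current record
                .map(speed -> "ALERT speed=" + speed) // rewrites only the current record
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        // prints [ALERT speed=95, ALERT speed=120]
        System.out.println(speedingAlerts(List.of(40, 95, 60, 120), 90));
    }
}
```

Because neither step depends on earlier records, the result for each reading is the same no matter what came before it, so no state store is needed.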

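Stateful Transformations

Stateful transformations depend on state for processing inputs and producing outputs, and they require a state store associated with the stream processor. The Kafka Streams library offers several stateful capabilities out of the box, including: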

Aggregation — An aggregation operation takes one input stream or table and yields a new table by combining multiple input records into a single output record. Examples of aggregations are computing counts or sum.

Joins — A join operation merges two input streams and/or tables based on the keys of their data records and yields a new stream/table. The join operations available in the Kafka Streams DSL differ based on which kinds of streams and tables are being joined — for example, KStream-KStream joins versus KStream-KTable joins.

Windowing — Windowing lets you control how to group records that have the same key for stateful operations, such as aggregations or joins into so-called windows. Windows are tracked per record key.

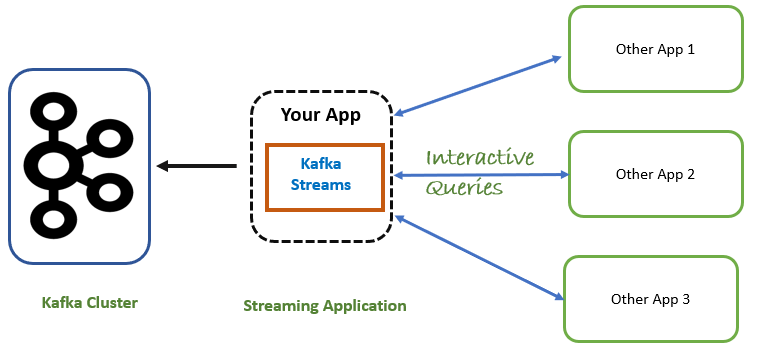

Interactive Queries — Interactive queries allow you to treat the stream processing layer as a lightweight embedded database and to directly query the latest state of your stream processing application. You can do this without having to first materialize that state to external databases or external storage.
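Aggregation and windowing can be combined in a small plain-Java sketch: records are counted per key, and each key's counts are split into fixed-size, non-overlapping time windows. The choice of this window type is an assumption made for the example, since the text above does not name one. The class `TumblingWindowCountSketch`, the `key@windowStart` state layout, and the sample data are hypothetical, and timestamps are assumed to be non-negative milliseconds.

```java
import java.util.Map;
import java.util.TreeMap;

// Plain-Java model of a windowed count; not the Kafka Streams API.
final class TumblingWindowCountSketch {
    private final long windowSizeMs;
    // State keyed by "key@windowStart", so windows are tracked per record key.
    private final Map<String, Long> counts = new TreeMap<>();

    TumblingWindowCountSketch(long windowSizeMs) {
        this.windowSizeMs = windowSizeMs;
    }

    // Align a timestamp to the start of the fixed-size window that contains it.
    long windowStart(long timestampMs) {
        return timestampMs - (timestampMs % windowSizeMs);
    }

    // Aggregation step: add one to the count for this key in the matching window.
    void onRecord(String key, long timestampMs) {
        counts.merge(key + "@" + windowStart(timestampMs), 1L, Long::sum);
    }

    // Current counts for every key and window seen so far.
    Map<String, Long> counts() {
        return counts;
    }

    public static void main(String[] args) {
        TumblingWindowCountSketch perMinute = new TumblingWindowCountSketch(60_000L);
        perMinute.onRecord("truck-1", 5_000L);
        perMinute.onRecord("truck-1", 59_000L);
        perMinute.onRecord("truck-1", 61_000L); // falls into the next window
        perMinute.onRecord("truck-2", 1_000L);
        System.out.println(perMinute.counts());
    }
}
```

The first two readings from `truck-1` land in the window starting at 0 ms and the third in the window starting at 60000 ms, while `truck-2` is counted separately because windows are tracked per key.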

Benefits of Interactive Queries

Real-time monitoring: A front-end dashboard that provides threat intelligence (e.g., web servers currently under attack by cybercriminals) can directly query a Kafka Streams application that continuously generates the relevant information by processing network telemetry data in real time.

Risk and fraud detection: A Kafka Streams application continuously analyzes user transactions for anomalies and suspicious behavior. An online banking application can directly query the Kafka Streams application when a user logs in, to deny access to users that have been flagged as suspicious.

Trend detection: A Kafka Streams application continuously computes the latest top charts across music genres based on user listening behavior that is collected in real time. Mobile or desktop applications of a music store can then interactively query for the latest charts while users are browsing the store.
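The trend detection case can be modelled in a few lines of plain Java: one method updates play counts as listening events arrive, and another answers "top N" queries directly from that in-memory state. This only sketches the pattern and does not use the Interactive Queries API. The class `TopChartsSketch`, its methods, and the tie-breaking rule (alphabetical by song name) are hypothetical.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Plain-Java model of querying continuously updated state; not the Kafka Streams API.
final class TopChartsSketch {
    // Genre -> (song -> play count); stands in for the application's local state.
    private final Map<String, Map<String, Long>> playsByGenre = new HashMap<>();

    // Processing side: update the state for every listening event.
    void onPlay(String genre, String song) {
        playsByGenre.computeIfAbsent(genre, g -> new HashMap<>())
                .merge(song, 1L, Long::sum);
    }

    // Query side: read the latest top songs straight from the state.
    List<String> top(String genre, int n) {
        return playsByGenre.getOrDefault(genre, Map.of()).entrySet().stream()
                .sorted(Map.Entry.<String, Long>comparingByValue().reversed()
                        .thenComparing(Map.Entry.comparingByKey()))
                .limit(n)
                .map(Map.Entry::getKey)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        TopChartsSketch charts = new TopChartsSketch();
        for (String song : List.of("b", "a", "b", "c", "a", "b")) {
            charts.onPlay("rock", song);
        }
        System.out.println(charts.top("rock", 2)); // prints [b, a]
        System.out.println(charts.top("jazz", 3)); // prints []
    }
}
```

The store application in the example would call something like `top` on demand, while the event stream keeps calling `onPlay` in the background.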

Conclusion

In this article, we have discussed the Kafka Streams API. In the next article, we will set up a single-node Kafka cluster on a Windows machine.

Please share any valuable feedback/questions you might have!
