SQL Loader + Unix Script: Loading Multiple Data Files in Oracle DB Table

Explore the power of SQL Loader + Unix Script utility where multiple data files can be loaded by the SQL loader with automated shell scripts.

Join the DZone community and get the full member experience.

Join For FreeHere, I am going to show the power of SQL Loader + Unix Script utility, where multiple data files can be loaded by the SQL loader with automated shell scripts. This would be useful while dealing with large chunks of data and when data needs to be moved from one system to another system.

It would be suitable for a migration project where large historical data is involved. Then, it is not possible to run the SQL loader for each file and wait till it's loaded. So the best option is to keep the Unix program containing the SQL loader command running all the time. Once any file is available in the folder location then it will pick up the files from that folder location and start processing immediately.

The Set Up

The sample program I have done in Macbook. Installation of Oracle differs from one from Windows machine.

Please go through the video that contains the detailed steps of how to install Oracle on Mac book.

Get the SQL developer with Java 8 compliance.

Now let us demonstrate the example.

Loading Multiple Data Files in Oracle DB Table

Because it is a Macbook, I have to do all the stuff inside the Oracle Virtual Machine.

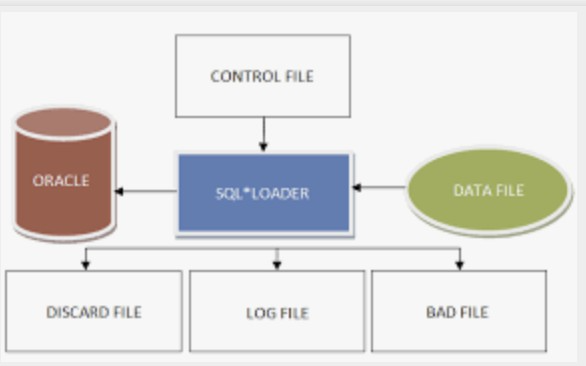

Let's see the below diagram of how SQL Loader works.

Use Case

We need to load millions of students' information onto to Student Table using shell scripts + SQL Loader Automation. The script will run all the time in the Unix server and poll for the .Dat file, and once the DAT file is in place, it will process them.

Also in case any bad data is there, you need to identify them separately.

This type of example is useful in a migration project, where need to load millions of historical records.

- From the old system, a live Feed (DAT file ) will be generated periodically and sent to the new system server.

- In the new system, the server file is available and will be loaded into the database using the automation Unix script.

- Now let's run the script. The script can run all the time on a Unix server. To achieve this, the whole code is put into the block below:

while true

[some logic]

doneThe Process

1. I have copied all the files + folder structure in the folder below.

/home/oracle/Desktop/example-SQLdr

2. Refer to the below file (ls -lrth):

rwxr-xr-x. 1 oracle oinstall 147 Jul 23 2022 student.ctl

-rwxr-xr-x. 1 oracle oinstall 53 Jul 23 2022 student_2.dat

-rwxr-xr-x. 1 oracle oinstall 278 Dec 9 12:42 student_1.dat

drwxr-xr-x. 2 oracle oinstall 48 Dec 24 09:46 BAD

-rwxr-xr-x. 1 oracle oinstall 1.1K Dec 24 10:10 TestSqlLoader.sh

drwxr-xr-x. 2 oracle oinstall 27 Dec 24 11:33 DISCARD

-rw-------. 1 oracle oinstall 3.5K Dec 24 11:33 nohup.out

drwxr-xr-x. 2 oracle oinstall 4.0K Dec 24 11:33 TASKLOG

-rwxr-xr-x. 1 oracle oinstall 0 Dec 24 12:25 all_data_file_list.unx

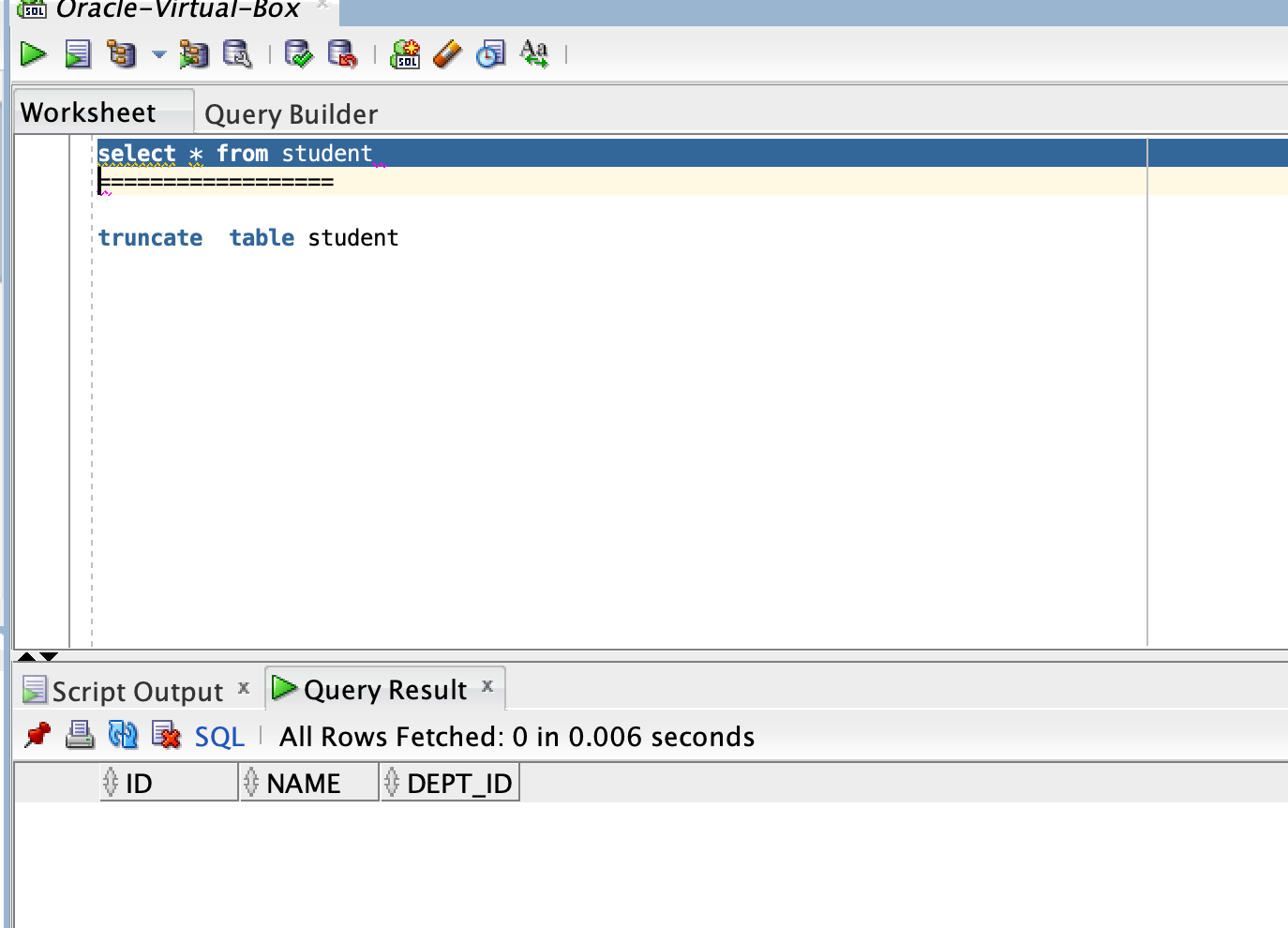

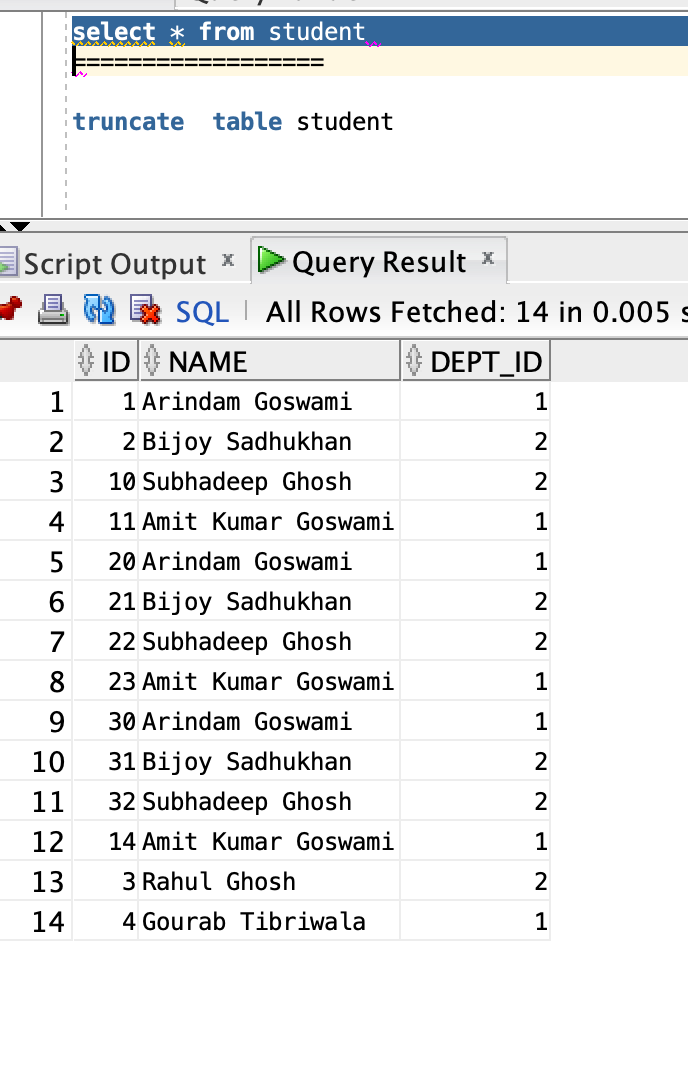

drwxr-xr-x. 2 oracle oinstall 6 Dec 24 12:29 ARCHIVE3. As shown below, there is no data in the student table.

4. Now run the script using the nohup.out ./TestSqlLoader.sh. By doing this it will run all the time in the Unix server.

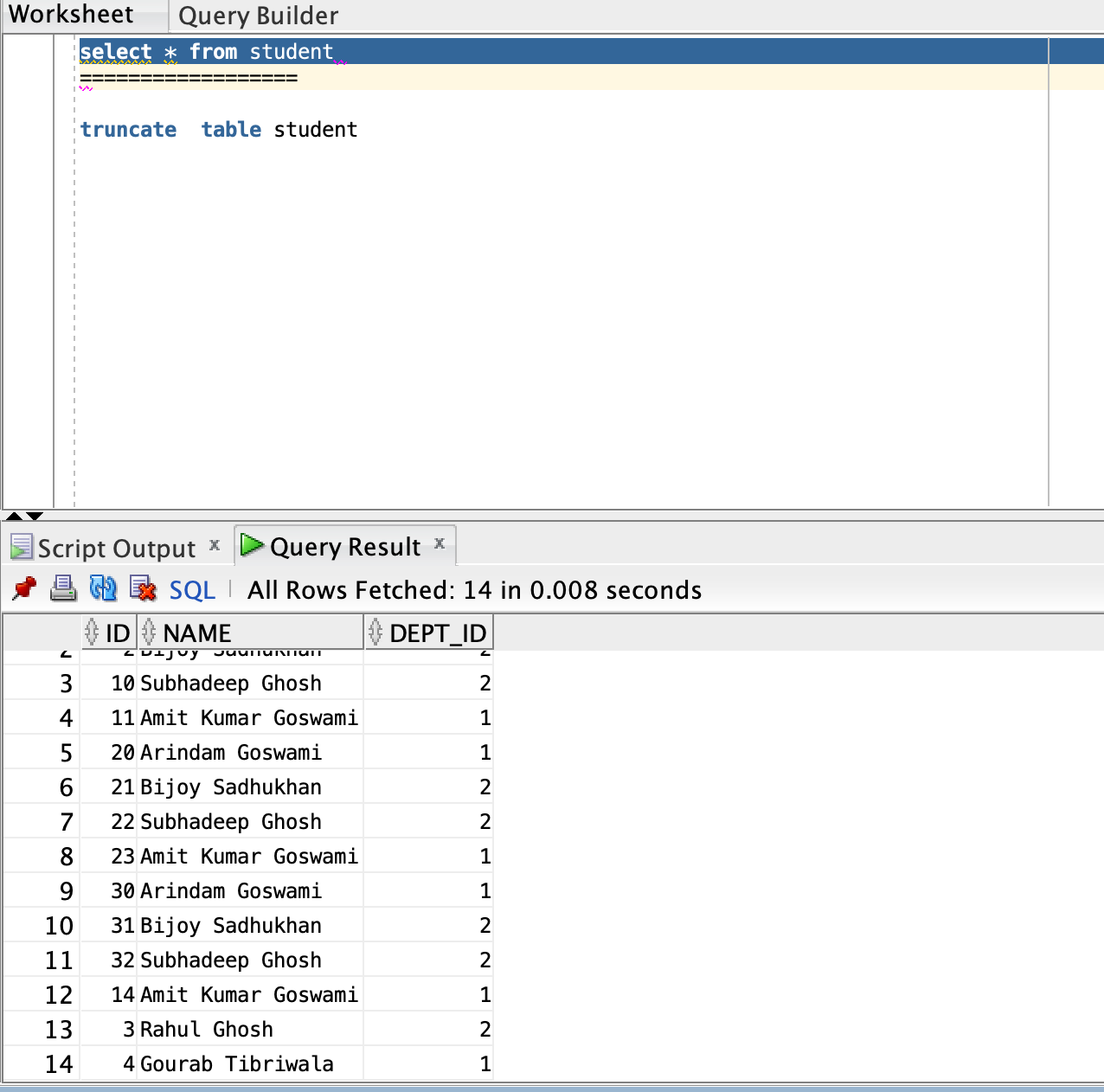

5. Now the script will run, which will load the two .dat files through the SQL loader.

6. The table should be loaded with the content of two files.

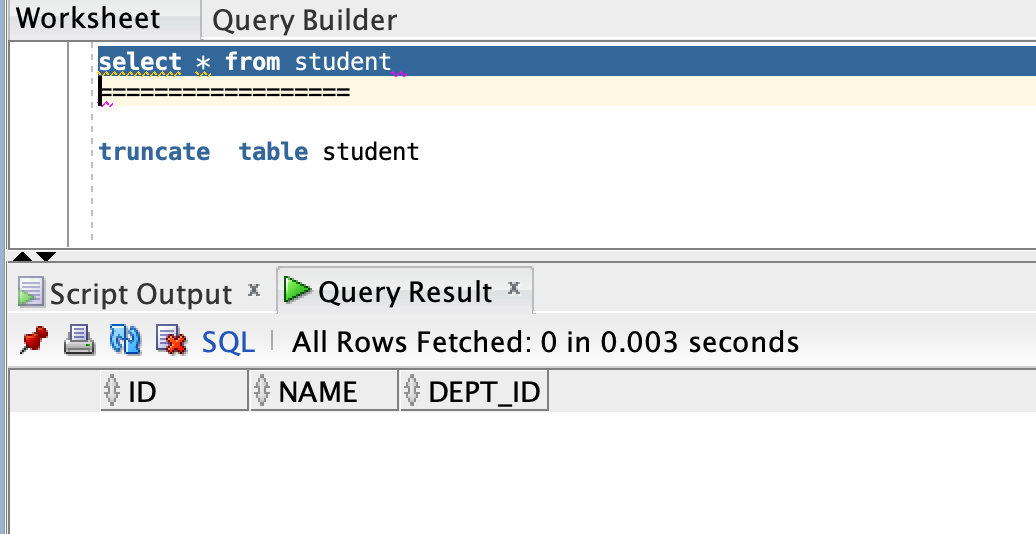

7. Now I am again deleting the table data. Just to prove the script is running all the time in the server, I will just place two DAT files from ARCHIVE to the current Directory.

8. Again place the two data files in the current directory.

-rwxr-xr-x. 1 oracle oinstall 147 Jul 23 2022 student.ctl

-rwxr-xr-x. 1 oracle oinstall 53 Jul 23 2022 student_2.dat

-rwxr-xr-x. 1 oracle oinstall 278 Dec 9 12:42 student_1.dat

drwxr-xr-x. 2 oracle oinstall 48 Dec 24 09:46 BAD

-rwxr-xr-x. 1 oracle oinstall 1.1K Dec 24 10:10 TestSqlLoader.sh

drwxr-xr-x. 2 oracle oinstall 27 Dec 24 12:53 DISCARD

-rw-------. 1 oracle oinstall 4.3K Dec 24 12:53 nohup.out

drwxr-xr-x. 2 oracle oinstall 4.0K Dec 24 12:53 TASKLOG

-rwxr-xr-x. 1 oracle oinstall 0 Dec 24 13:02 all_data_file_list.unx

drwxr-xr-x. 2 oracle oinstall 6 Dec 24 13:03 ARCHIVE9. See the student table again has loaded with all the data.

10. The script is running all the time on the server:

10. The script is running all the time on the server:

[oracle@localhost example-sqldr]$ ps -ef|grep Test

oracle 30203 1 0 12:53? 00:00:00 /bin/bash ./TestSqlLoader.sh

oracle 31284 31227 0 13:06 pts/1 00:00:00 grep --color=auto TestFull Source Code for Reference

#!/bin/bash

bad_ext='.bad'

dis_ext='.dis'

data_ext='.dat'

log_ext='.log'

log_folder='TASKLOG'

arch_loc="ARCHIVE"

bad_loc="BAD"

discard_loc="DISCARD"

now=$(date +"%Y.%m.%d-%H.%M.%S")

log_file_name="$log_folder/TestSQLLoader_$now$log_ext"

while true;

do

ls -a *.dat 2>/dev/null > all_data_file_list.unx

for i in `cat all_data_file_list.unx`

do

#echo "The data file name is :-- $i"

data_file_name=`basename $i .dat`

echo "Before executing the sql loader command ||Starting of the script" > $log_file_name

sqlldr userid=hr/oracle@orcl control=student.ctl errors=15000 log=$i$log_ext bindsize=512000000 readsize=500000 DATA=$data_file_name$data_ext BAD=$data_file_name$bad_ext DISCARD=$data_file_name$dis_ext

mv $data_file_name$data_ext $arch_loc 2>/dev/null

mv $data_file_name$bad_ext $bad_loc 2>/dev/null

mv $data_file_name$dis_ext $discard_loc 2>/dev/null

mv $data_file_name$data_ext$log_ext $log_folder 2>/dev/null

echo "After Executing the sql loader command||File moved successfully" >> $log_file_name

done

## halt the procesing for 2 mins

sleep 1m

doneThe CTL file is below.

OPTIONS (SKIP=1)

LOAD DATA

APPEND

INTO TABLE student

FIELDS TERMINATED BY '|' OPTIONALLY ENCLOSED BY '"'

TRAILING NULLCOLS

(

id,

name,

dept_id

)

The SQL Loader Specification

control- Name of the .ctl fileerrors=15000- Maximum number of errors SQL Loader can allowlog=$i$log_ext- Name of the log filebindsize=512000000- Max size of bind arrayreadsize=500000- Max size of the read bufferDATA=$data_file_name$data_ext- Name and location of data fileBAD=$data_file_name$bad_ext- Name and location of bad fileDISCARD=$data_file_name$dis_ext- Name and location of discard file

In this way stated above, millions of records can be loaded through SQL loader + Unix Script automated way, and the above parameter can be set according to the need.

Please let me know if you like this article.

Opinions expressed by DZone contributors are their own.

Comments